Deepfakes, digital manipulations created using artificial intelligence (AI), are making it increasingly difficult to distinguish between what’s real and what’s not. While these AI-generated creations have transformed industries like entertainment, education, and healthcare, they also present significant risks to businesses.

Emerging Threats

Deepfakes imitating public officials can spread disinformation and fake news. Worse, fraudsters can use deepfake images or videos of business owners or executives to facilitate phishing attacks and access confidential information.

The risk isn’t limited to visual content. Audio deepfakes, which replicate a person’s voice, can be used to deceive employees. For instance, a convincing voicemail from a “business partner” might instruct someone in your accounting team to transfer funds to an overseas account.

The Challenge of Detection

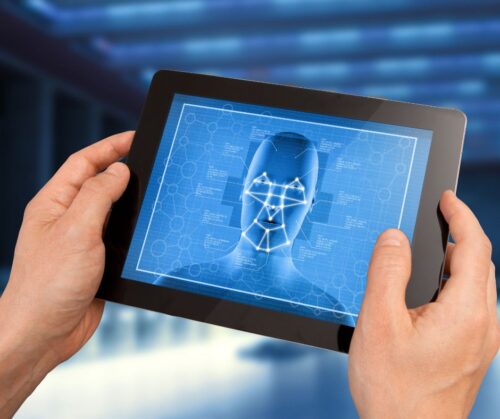

AI tools can detect deepfakes by analyzing anomalies such as unnatural facial movements, awkward body postures, and inconsistent lighting. However, this technology is still developing and isn’t foolproof.

Other approaches, like watermarking, hold promise but are relatively easy to bypass and not yet widely adopted. Legal measures to address deepfakes are growing, but they focus more on penalizing misuse than on preventing the creation of deepfakes.

Warning Signs to Watch For

Educating your team to recognize deepfake indicators is essential. Be alert for these red flags in video or audio content:

- Unnatural eye movements: Look for odd blinking patterns or an inconsistent gaze, which are hard for deepfake creators to replicate.

- Unrealistic faces: Watch for mismatched skin tones, strange lighting, blurred edges, or exaggerated expressions.

- Lip-sync and audio discrepancies: Notice if lip movements don’t match the audio or if the tone, emotion, or timing feels unnatural.

- Distorted backgrounds: Be wary of warped objects or backgrounds that blend oddly with the foreground.

- Unbelievable content: Deepfakes often feature sensational or out-of-character behavior.

- Dubious sources: Deepfakes frequently originate from unreliable platforms. Treat viral content with skepticism.

Verifying Content

Until detection technology improves, maintaining a skeptical mindset is your best defense. Always verify video or audio files by cross-checking with credible sources. If employees receive unusual requests via voicemail or video, encourage them to confirm the request directly with the supposed sender, by phone or in person, before acting on it.

Contact us with questions or for help training your employees to recognize malicious deepfakes and other fraud schemes.